A newly identified, large-scale cybercrime operation is systematically compromising vulnerable Large Language Model (LLM) service endpoints and reselling the unauthorized access, a significant escalation in AI-related threats. The campaign, dubbed "Bizarre Bazaar" by security researchers, is an early instance of "LLMjacking," in which threat actors exploit misconfigured or poorly authenticated AI systems for commercial gain, exposing organizations to financial, data, and operational risks.

Over a forty-day observation period, researchers at Pillar Security recorded more than 35,000 distinct attack sessions against their honeypot network. The telemetry revealed an organized criminal enterprise built to exploit and commercialize illicit access to AI compute. The volume and persistence of the attacks point to a dedicated threat actor group working to establish a black market for compromised AI capabilities. What sets the operation apart is that it actively monetizes access rather than stopping at reconnaissance or initial intrusion.

The primary objective of the Bizarre Bazaar operation revolves around the unauthorized leveraging of weakly protected LLM infrastructure. This exploitation encompasses several critical activities, including the surreptitious creation of malicious or fraudulent AI models, the exfiltration of sensitive organizational data processed or stored within these systems, the deliberate disruption of legitimate AI services, and the unauthorized consumption of valuable computational resources, leading to substantial financial burdens for the victims. These activities highlight a multi-faceted threat model where attackers seek both direct financial profit and strategic advantage through data theft and service degradation.

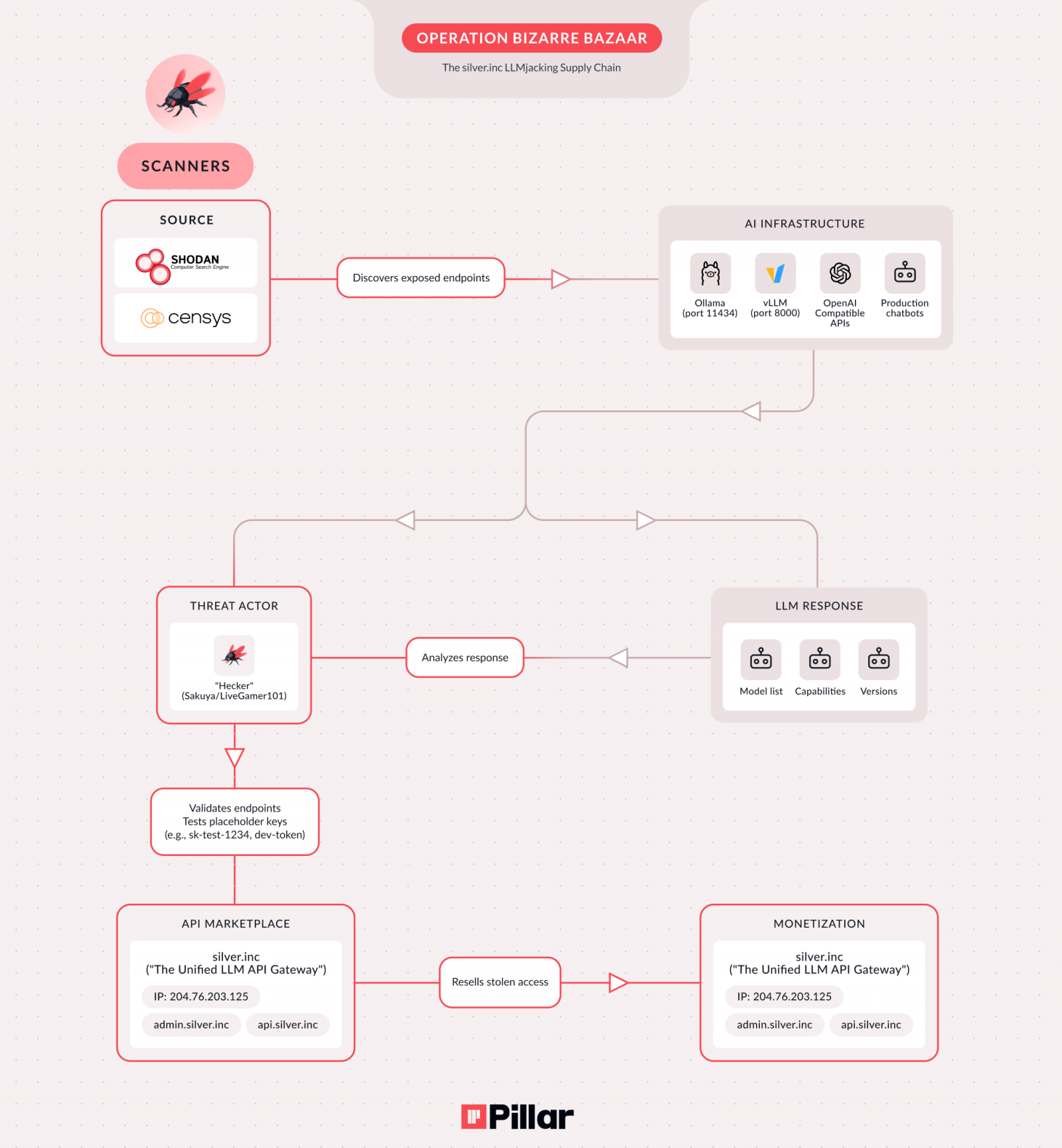

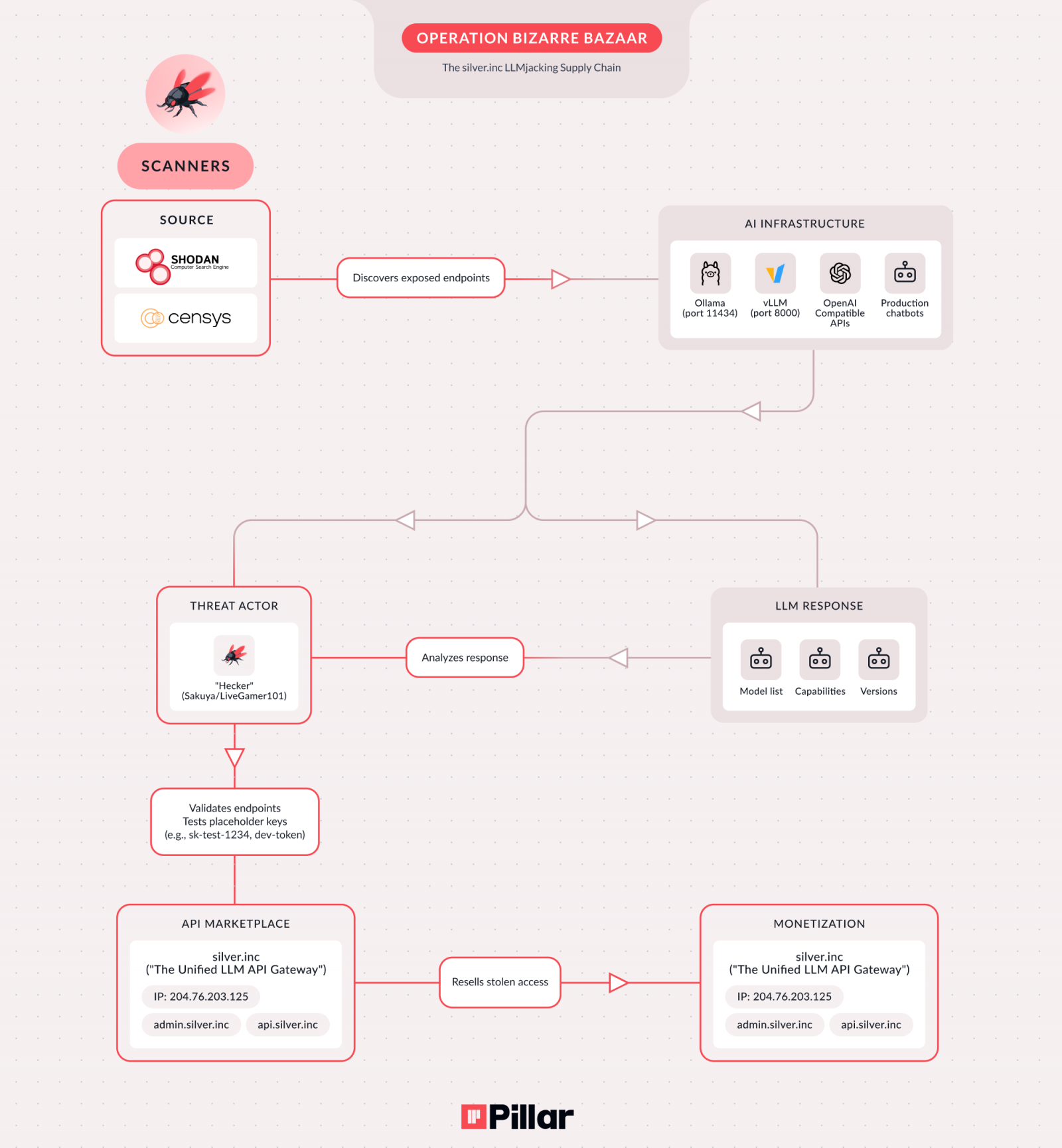

Attackers rely on a handful of common vectors to find and infiltrate susceptible AI environments: self-hosted LLM deployments without hardened configurations, publicly exposed or unauthenticated AI APIs, reachable Model Context Protocol (MCP) servers, and development or staging AI environments inadvertently exposed to the internet on public IP addresses. The speed of exploitation is particularly concerning. Researchers noted that attacks typically begin within hours of a misconfigured endpoint being indexed by internet scanning services such as Shodan or Censys, which points to automated reconnaissance that feeds directly into exploitation.
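As an illustration of how an inadvertently exposed listener might be caught before a scanner finds it, the following is a minimal sketch that flags services bound to a wildcard or globally routable address. The function names and the listener tuples are hypothetical and do not come from Pillar Security's report. The check is deliberately conservative: a wildcard bind behind a firewall or NAT may not actually be reachable.

```python
import ipaddress

def is_publicly_reachable_bind(bind_host: str) -> bool:
    """Return True if a listener on this IP literal may accept internet traffic.

    Expects an IP address string (not a hostname such as "localhost").
    """
    addr = ipaddress.ip_address(bind_host)
    if addr.is_unspecified:
        # 0.0.0.0 or :: listens on every interface, including public ones.
        return True
    # Loopback and private ranges (127.0.0.0/8, 10.0.0.0/8, ...) are not global.
    return addr.is_global

def flag_exposed(listeners):
    """Filter (name, host, port) tuples down to the ones that look exposed."""
    return [entry for entry in listeners if is_publicly_reachable_bind(entry[1])]

if __name__ == "__main__":
    # Hypothetical inventory of AI services and their bind addresses.
    inventory = [
        ("staging-llm", "0.0.0.0", 8000),
        ("local-llm", "127.0.0.1", 11434),
    ]
    for name, host, port in flag_exposed(inventory):
        print(f"review binding: {name} on {host}:{port}")
```

Binding development services to a loopback address by default, and widening the bind only behind an authenticated proxy, keeps them out of such a report.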

Specific misconfigurations are routinely exploited. Unauthenticated Ollama endpoints, often found running on port 11434, are a prevalent weakness. OpenAI-compatible APIs running on port 8000 without authentication are likewise prime targets, and unauthenticated production chatbots offer another straightforward entry point. The ease with which these weaknesses are found and exploited exposes a gap in current AI deployment practice, where functionality and speed are often prioritized over access controls.
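Defenders can check their own hosts for the misconfigurations described above. The sketch below is an assumption-laden self-audit, not the researchers' tooling: it requests the model-listing route of each service (`/api/tags` for Ollama, `/v1/models` for OpenAI-compatible servers) without credentials and classifies the answer. The port-to-path mapping follows the default ports named in the article; the function names and result labels are hypothetical. Run it only against systems you are authorized to test.

```python
import json
import urllib.error
import urllib.request

# Model-listing routes, keyed by the default ports named in the article.
MODEL_LIST_PATHS = {
    11434: "/api/tags",   # Ollama
    8000: "/v1/models",   # OpenAI-compatible API
}

def classify_response(status: int, body: str) -> str:
    """Map an unauthenticated HTTP response to a coarse exposure label."""
    if status in (401, 403):
        return "auth-required"
    if status == 200:
        try:
            data = json.loads(body)
        except ValueError:
            return "unknown"
        # Ollama answers {"models": [...]}; OpenAI-style servers {"data": [...]}.
        if isinstance(data, dict) and ("models" in data or "data" in data):
            return "open"
    return "unknown"

def probe(host: str, port: int, timeout: float = 3.0) -> str:
    """Send one credential-free GET to a host you own and classify the result."""
    url = f"http://{host}:{port}{MODEL_LIST_PATHS[port]}"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            body = resp.read().decode("utf-8", "replace")
            return classify_response(resp.status, body)
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses arrive as exceptions; classify by status code.
        return classify_response(err.code, "")
    except (urllib.error.URLError, OSError):
        return "unreachable"
```

An `open` result from an address outside the local network means the model list is readable without credentials, which is the condition the article describes attackers looking for.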

The implications of such compromises extend well beyond traditional API abuse. As Pillar Security details, compromised LLM endpoints can run up significant, unforeseen costs because inference requests and the compute behind them are expensive. The potential data exposure is also broad, ranging from proprietary algorithms and training data to confidential user interactions and business intelligence. Perhaps most critically, a successful LLMjacking attack can give threat actors lateral movement opportunities within an organization’s wider network, potentially reaching other critical systems, cloud environments, and sensitive data repositories. That turns an AI-specific compromise into a potential enterprise-wide breach.
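Unexpected inference cost usually shows up first as a jump in usage. As a rough illustration, the sketch below flags hours in which token consumption exceeds a multiple of the trailing median. The window size, the multiplier, and the sample numbers are arbitrary assumptions chosen for the example, not figures from the report.

```python
from statistics import median

def find_usage_spikes(hourly_tokens, window=24, factor=5.0):
    """Return indexes of hours whose usage exceeds factor x the trailing median.

    hourly_tokens: token counts per hour, oldest first.
    window: number of preceding hours used as the baseline (assumed value).
    factor: multiplier over the baseline that counts as a spike (assumed value).
    """
    spikes = []
    for i in range(window, len(hourly_tokens)):
        baseline = median(hourly_tokens[i - window:i])
        # max(..., 1) avoids flagging every request on an otherwise idle endpoint.
        if hourly_tokens[i] > factor * max(baseline, 1):
            spikes.append(i)
    return spikes

if __name__ == "__main__":
    # Synthetic data: a quiet day followed by one abnormal hour.
    usage = [100] * 24 + [120, 5000, 110]
    print(find_usage_spikes(usage))
```

A median baseline is used here because a single hijacked hour does not drag it upward the way a mean would, so the hours after a spike are still judged against normal usage.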

Adding context to the evolving threat landscape, an earlier report from GreyNoise had indicated similar activity, where attackers primarily targeted commercial LLM services for enumeration purposes. While GreyNoise’s findings suggested a focus on discovery, Pillar Security’s investigation into Bizarre Bazaar reveals a more advanced stage of criminal activity: the systematic monetization of these vulnerabilities. This progression from reconnaissance to commercial exploitation signifies a maturing ecosystem for AI-focused cybercrime.

Pillar Security’s analysis reveals a sophisticated criminal supply chain, indicating a collaborative effort among at least three distinct threat actor roles operating as part of the same overarching campaign. The first actor leverages automated bots to continuously scan the vast expanse of the internet for exposed LLM and MCP endpoints, acting as the initial reconnaissance layer. The second actor is responsible for validating these findings, meticulously testing access to confirm the viability of the identified vulnerabilities. The third and final actor in this chain operates a dedicated commercial service, prominently marketed under the domain "silver[.]inc" and actively promoted across clandestine channels on platforms like Telegram and Discord. This entity serves as the marketplace for reselling illicit access, accepting payments in various cryptocurrencies or via PayPal, demonstrating a well-established financial infrastructure.

The SilverInc platform actively promotes a project named "NeXeonAI," which is advertised as a "unified AI infrastructure" offering unauthorized access to a diverse portfolio of over fifty different AI models from leading providers. This illicit marketplace provides a direct conduit for other malicious actors to leverage compromised AI resources without the need for their own reconnaissance or exploitation capabilities, effectively democratizing access to stolen AI computational power and data. Researchers have also attributed leadership of this operation to a specific individual or group using various aliases, including "Hecker," "Sakuya," and "LiveGamer101," further solidifying the identification of a dedicated threat actor behind Bizarre Bazaar.

While the Bizarre Bazaar operation primarily focuses on LLM API abuse, Pillar Security’s findings also highlight a parallel, distinct campaign concentrating on MCP endpoint reconnaissance. This separate targeting, though currently unlinked to Bizarre Bazaar, presents an even graver threat. Exploiting MCP endpoints offers significantly broader opportunities for lateral movement within a compromised network, enabling interactions with Kubernetes clusters, access to cloud services, and the execution of arbitrary shell commands. Such capabilities are often far more valuable to threat actors than merely monetizing resource consumption, potentially leading to full system compromise and deeper organizational infiltration. The potential for a future convergence or collaboration between these two campaigns represents a critical concern for cybersecurity professionals.

As of the current assessment, the Bizarre Bazaar campaign remains fully operational, with the SilverInc service actively engaged in its illicit trade. This ongoing threat underscores the urgent need for organizations deploying and utilizing AI technologies to reassess and significantly bolster their security postures.

Implications and Future Outlook

The Bizarre Bazaar operation serves as a stark warning of the burgeoning threat landscape surrounding artificial intelligence. The financial ramifications of LLMjacking are immediate and substantial, as victims bear the cost of unauthorized inference requests and resource consumption. However, the long-term consequences are far more severe, encompassing potential intellectual property theft, compromise of sensitive customer or proprietary data, reputational damage, and the erosion of trust in AI systems. The ability of attackers to generate malicious models or manipulate existing ones also opens doors to new forms of fraud, misinformation, and even targeted attacks against individuals or organizations.

To mitigate these escalating risks, organizations must adopt a proactive and multi-layered security strategy for their AI infrastructure. Key preventative measures include:

- Robust Authentication and Authorization: Requiring strong authentication on all AI endpoints and APIs, with multi-factor authentication (MFA) for human users, coupled with granular role-based access control (RBAC) so that only authorized users and services can interact with LLM and MCP endpoints.

- Network Segmentation: Isolating AI infrastructure within segmented network zones, restricting direct public internet access, and employing firewalls to control inbound and outbound traffic. Development and staging environments should never be publicly exposed.

- Secure Configuration Management: Adhering to secure configuration best practices for all LLM platforms, frameworks (e.g., Ollama), and related services. Regularly auditing configurations for missteps like unauthenticated access.

- API Security Gateways: Utilizing API gateways to enforce security policies, rate limiting, and input validation for all AI API interactions, thereby protecting against common API abuse techniques.

- Continuous Monitoring and Threat Detection: Implementing comprehensive logging and monitoring solutions to detect anomalous activity, unauthorized access attempts, and unusual resource consumption patterns. AI-specific threat detection tools can provide valuable insights.

- Vulnerability Management: Regularly scanning for vulnerabilities in AI infrastructure, libraries, and dependencies, and promptly patching identified weaknesses.

- Data Governance and Encryption: Encrypting sensitive data at rest and in transit within AI systems and implementing robust data governance policies to minimize the exposure of confidential information.

- Incident Response Planning: Developing and regularly testing an incident response plan tailored to AI-related breaches, ensuring rapid detection, containment, eradication, and recovery.
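Two of the measures above, authentication and rate limiting, can be sketched in a few lines. The example below is a simplified, framework-independent illustration under stated assumptions: the `Gatekeeper` and `TokenBucket` classes, the client identifiers, and the limits are all hypothetical, and a production gateway would also need secret storage, TLS, and logging. It compares keys in constant time and applies a per-client token bucket.

```python
import hmac
import time
from typing import Callable, Dict

class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` per second."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity,
                          self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

class Gatekeeper:
    """Return an HTTP-style status for each request: 200, 401, or 429."""

    def __init__(self, api_keys: Dict[str, str], capacity: float = 5,
                 rate: float = 1.0,
                 clock: Callable[[], float] = time.monotonic):
        self.api_keys = api_keys          # client id -> shared secret
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.buckets: Dict[str, TokenBucket] = {}

    def check(self, client_id: str, presented_key: str) -> int:
        expected = self.api_keys.get(client_id, "")
        # Constant-time comparison avoids leaking key contents through timing.
        matches = hmac.compare_digest(expected.encode(), presented_key.encode())
        if not expected or not matches:
            return 401
        if client_id not in self.buckets:
            self.buckets[client_id] = TokenBucket(self.capacity, self.rate, self.clock)
        return 200 if self.buckets[client_id].allow() else 429
```

Rejecting unauthenticated calls before any rate-limit state is allocated means anonymous scanners cannot consume memory or inference capacity, and the per-client bucket caps the cost a single leaked key can cause.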

The professionalization of AI-focused cybercrime, as exemplified by the Bizarre Bazaar operation, signals a new era in cybersecurity. As AI technologies become more pervasive across industries, the attack surface will continue to expand, attracting increasingly sophisticated threat actors. The evolution from simple enumeration to organized commercial exploitation underscores the critical need for organizations to integrate AI security as a foundational component of their overall cybersecurity strategy, moving beyond traditional security paradigms to address the unique challenges presented by intelligent systems. Failure to do so risks not only financial loss but also the integrity and trustworthiness of the AI systems that underpin modern enterprises.