OpenAI is upgrading the temporary chat feature in ChatGPT. Temporary sessions can now draw on a user's personalization settings, yet they remain excluded from the user's saved chat history and from model training data. The change is meant to balance convenience with data privacy by offering a more tailored conversation even in a transient session. For short-term, sensitive, or experimental queries, temporary chats should now feel less generic while still leaving the user's history untouched, which is the "clean slate" property that defines them.

The concept of a "temporary chat" or "incognito mode" in AI chat assistants was originally meant to address several user concerns. Foremost among these was privacy. Users wanted to interact with the AI without their queries, the responses, or the session's content being stored in their persistent chat history, so that sensitive discussions, passing ideas, or experimental prompts would not linger. Temporary chats also guarded against unintended influence on the AI's long-term memory or its training data. By excluding these sessions from model training, OpenAI aimed to offer an isolated environment and to ease fears that casual or exploratory interactions might shape future model behavior or resurface in later, unrelated conversations. In its original form, a temporary chat was a blank canvas with no past context, custom instructions, or user-specific stylistic preferences. This design gave strong privacy guarantees, but it often produced a generic, less efficient exchange for users accustomed to their personalized assistant.

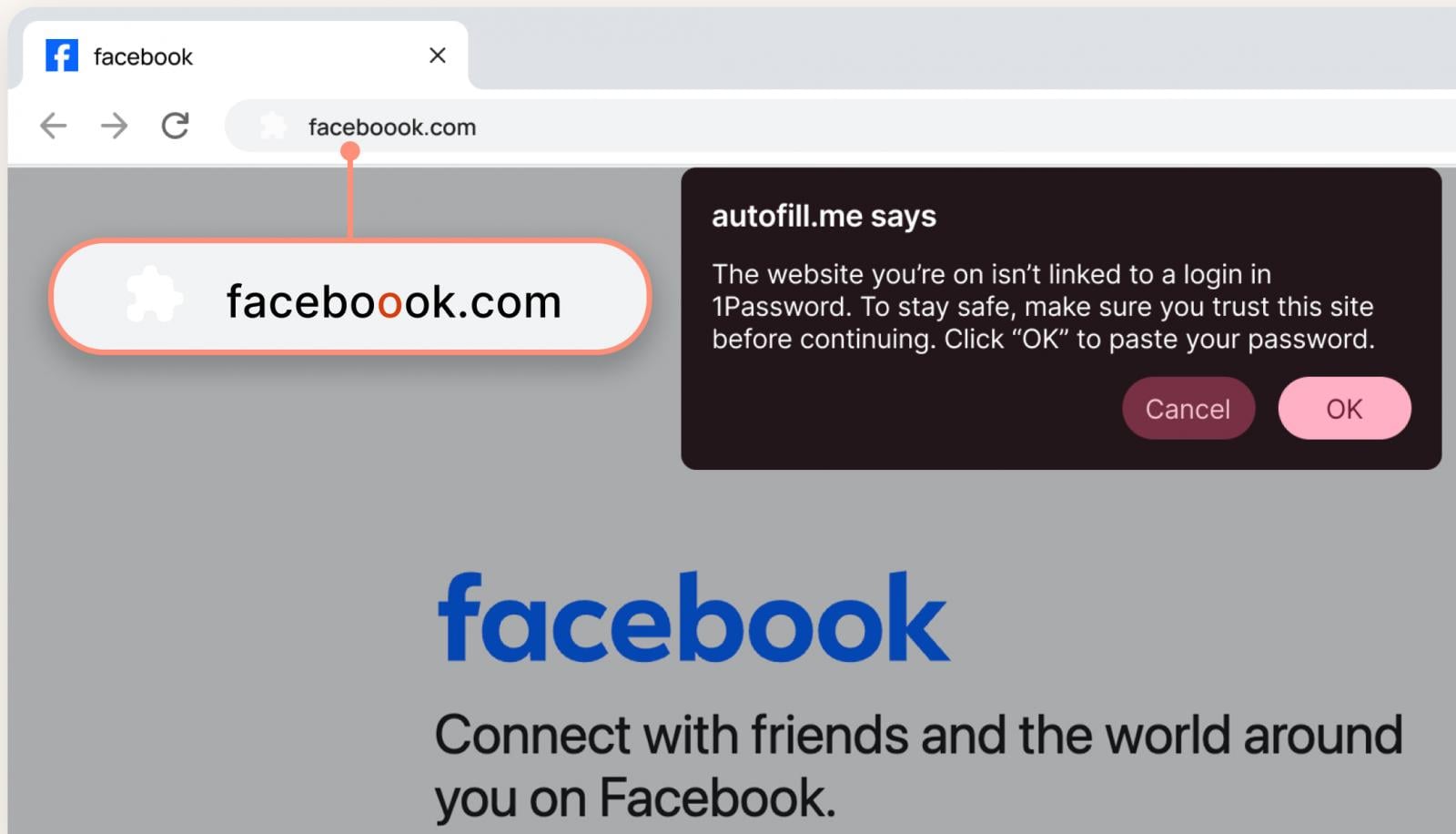

The upgrade changes this by bringing personalization into these ephemeral sessions. The chat itself remains temporary: it is not saved to the user's history and is not used for model training. It can, however, now draw on the user's existing memory, past chat history, and style and tone preferences. If a user has set custom instructions for ChatGPT, such as specific response formats, persona traits, or topics to avoid, those directives now apply in temporary chats as well. The AI adapts its output to the user's preferred communication style, remembered context, and preset parameters, so the interaction feels consistent with the user's regular chats. The aim is to close the gap between strict privacy and contextual relevance, letting users keep their usual conversational setup even in a "do not remember" mode. To use the feature, users select the "Temporary" option when starting a new chat, and they can switch personalization off when they want a completely uninfluenced session.

The rationale behind this update has several parts. From a user experience perspective, it removes a common friction point: users previously had to choose between the privacy of a temporary chat and the efficiency of a personalized one. Before this change, a temporary session meant giving up an assistant that understood one's needs, which led to repeated clarifications or weaker responses. By letting personalization carry over, OpenAI makes temporary chats useful in a wider range of scenarios. For instance, a user might need to quickly draft a document in a specific tone for a client without that interaction influencing their general AI persona or appearing in their main history. The upgraded temporary chat handles this case, producing output tailored to the user's established preferences without the conversation being added to their saved history or shaping their future chats.

From a data governance and ethical AI standpoint, the update reflects OpenAI's stated emphasis on user control. The challenge is to offer a richer, personalized experience without undermining the core privacy promise of temporary sessions. The approach appears to be a one-way flow of data: user preferences (custom instructions, stylistic tendencies, relevant memory snippets) are loaded and applied to the current session, while the session's content is blocked from being logged to, or fed back into, the persistent user profile or training data. Applying user data selectively in this way requires robust isolation so that the chat stays temporary even as it becomes more context-aware.
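The one-way flow described above can be illustrated with a small model: preferences are read into the session, but turns are persisted only for regular chats. This is a minimal Python sketch, not OpenAI's implementation, which has not been published. Every class and field name (`UserProfile`, `Account`, `Session`, `saved_history`, `training_pool`, `personalize`) is hypothetical. The sketch assumes the profile is exposed to the session as a frozen, read-only object, and it deliberately does not model the 30-day safety retention.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class UserProfile:
    """Hypothetical read-only view of stored preferences (frozen: sessions cannot mutate it)."""
    custom_instructions: str
    tone: str
    memories: tuple[str, ...] = ()


@dataclass
class Account:
    """Hypothetical persistent account state."""
    profile: UserProfile
    saved_history: list[tuple[str, str]] = field(default_factory=list)
    training_pool: list[tuple[str, str]] = field(default_factory=list)


@dataclass
class Session:
    account: Account
    temporary: bool
    personalize: bool = True  # hypothetical stand-in for the personalization toggle
    transcript: list[tuple[str, str]] = field(default_factory=list)

    def build_context(self) -> str:
        """Read path: copy preferences into the session; nothing is written back."""
        if not self.personalize:
            return ""  # blank-slate session with no user influence
        p = self.account.profile
        lines = [f"Instructions: {p.custom_instructions}", f"Tone: {p.tone}"]
        lines += [f"Memory: {m}" for m in p.memories]
        return "\n".join(lines)

    def record_turn(self, prompt: str, reply: str) -> None:
        """Write path: always keep the turn in-session, persist it only for regular chats."""
        self.transcript.append((prompt, reply))
        if not self.temporary:
            self.account.saved_history.append((prompt, reply))
            self.account.training_pool.append((prompt, reply))


acct = Account(UserProfile("Answer in bullet points", "formal", ("Works in marketing",)))
temp = Session(acct, temporary=True)
print(temp.build_context())
temp.record_turn("Draft a client email", "...")
print(len(acct.saved_history))  # 0: the temporary turn was never persisted
```

Making the profile immutable and gating every write on the `temporary` flag keeps the read and write paths separate, which is the isolation property the paragraph describes. In a real system, whether a regular chat feeds training would also depend on the user's data controls. This sketch simplifies that away.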

However, one caveat concerns data retention for safety purposes. OpenAI states that, for safety reasons, a copy of a temporary chat may be retained for up to 30 days. Short-term retention of this kind is common across online platforms and supports investigations into misuse and policy violations, as well as responses to security concerns. Although the chat content is excluded from the user's account and from model training, this retention window shows the tension between user privacy, platform security, and regulatory compliance in a fast-moving field. It also illustrates how hard it is for AI developers to build fully ephemeral interaction environments while meeting safety standards and legal obligations.

This enhancement to temporary chats is not an isolated development but part of a broader effort by OpenAI to give users more control and a more tailored AI experience. Alongside features like "Custom Instructions" and "Memory," which let users define persistent preferences and context for their AI interactions, the refined temporary chat adds another way to work with the assistant. Together, these features aim to turn ChatGPT from a generic conversational agent into an adaptable, personalized assistant that fits into diverse workflows while respecting user-defined limits on data persistence and privacy.

In parallel with these personalization efforts, OpenAI has been rolling out other changes focused on user management and responsible deployment. A notable example is an age prediction model for ChatGPT. The system is designed to estimate a user's age from their conversational patterns, linguistic cues, and overall interaction behavior. Its primary objective is to improve child safety and deliver age-appropriate content, in particular by restricting access to certain sensitive topics for younger users. For instance, accounts identified as belonging to minors may face limits on discussions of violence, self-harm, or other potentially harmful content, including viral online challenges.

An age prediction model, however well-intentioned, brings its own complications. OpenAI itself acknowledges that the model is not infallible. Adult users might be incorrectly flagged as teenagers and face unwarranted account restrictions, while teenagers whose conversational patterns read as mature could slip past the restrictions. To limit the impact of wrong predictions, OpenAI provides a recourse: adults who have been incorrectly restricted can verify their age and regain full access to the platform's capabilities. This shows the ongoing work needed to make AI systems that manage user demographics accurately and fairly while still delivering a good user experience.

The evolution of ChatGPT's temporary chat feature, together with advances in user-specific memory and age-gating, signals a maturing phase in AI development. The industry is moving beyond raw functional capability toward a fuller view of user needs that covers personalization, privacy, safety, and control. These updates reflect a shift toward AI systems that adapt to a diverse user base while handling the complexities of digital interaction responsibly. The implications go beyond individual convenience: they point toward AI assistants that are trusted as well as powerful, with interactions managed to match individual preferences and societal expectations for safety and privacy. Continued refinement of these interaction models will be important for broader adoption of, and trust in, AI technologies.