This article explores the surprising and often unsettling results of attempting to replicate the emotional narrative of a recent Google Gemini advertisement using a personal, cherished children’s toy, highlighting the capabilities and limitations of advanced AI image and video generation tools when confronted with the nuanced realities of parental love and childhood attachment.

The age-old parental wisdom of buying a duplicate of a child's favorite stuffed animal, a seemingly simple safeguard against inevitable loss, takes on a new dimension in the era of artificial intelligence. Google's recent promotional material for its Gemini AI platform presented a heartwarming, albeit fictional, scenario: a family distressed by the loss of a beloved toy, Mr. Fuzzy the lamb, uses AI to bridge the emotional gap. The advertisement showed Gemini trying to locate a replacement and, when that proved elusive, crafting a series of imaginative digital adventures for Mr. Fuzzy. These fabricated escapades, ranging from Parisian landmarks to Spanish bullrings and ending with a direct video message to the child, painted AI as a powerful tool for comfort and creative problem-solving in moments of childhood upset. The compelling narrative naturally raises the question: can artificial intelligence actually deliver on such sophisticated emotional and creative promises in a real-world context?

To find out, an experiment was conducted that mirrored the advertisement's core premise using a deeply cherished stuffed deer named "Buddy," who holds a similarly central place in a child's world. The first phase involved giving Gemini three photographs of Buddy taken from different angles, together with a prompt intended to find a replacement: "find this stuffed animal to buy ASAP." The response was far from the direct, efficient outcome depicted in the advertisement. Instead of a concise purchasing suggestion, Gemini produced an unexpectedly long, 1,800-word essay detailing its reasoning. This exhaustive monologue, with lines such as "I am considering the puppy hypothesis" and "The tag is a loop on the butt," revealed a complex and at times comically circuitous chain of reasoning. The AI wavered over Buddy's species, oscillating between canine and lagomorph classifications, speculated about when the product might have been discontinued, and ultimately suggested online marketplaces such as eBay. The output illustrates two current weaknesses: a tendency to over-explain, and difficulty committing to a concise identification when the visual evidence is ambiguous.

The ambiguity of Buddy's design, a generic cute woodland creature with a worn care tag and an uncertain origin, contributed to Gemini's difficulties. Through personal knowledge and a brief offline investigation, the author identified Buddy as most likely a fawn from Mary Meyer's discontinued "Putty" collection, dating from around 2021. Gemini's initial visual analysis, by contrast, confidently misidentified the toy as a puppy when performing a reverse image search. The discrepancy highlights a key point: how well AI handles object recognition and product sourcing depends heavily on how clear and distinctive the input is. Human intuition, combined with contextual knowledge and even a passing familiarity with brand details (such as the loop tag on Buddy's posterior that marks a Mary Meyer product), often still outperforms current AI at synthesizing such nuanced cues.

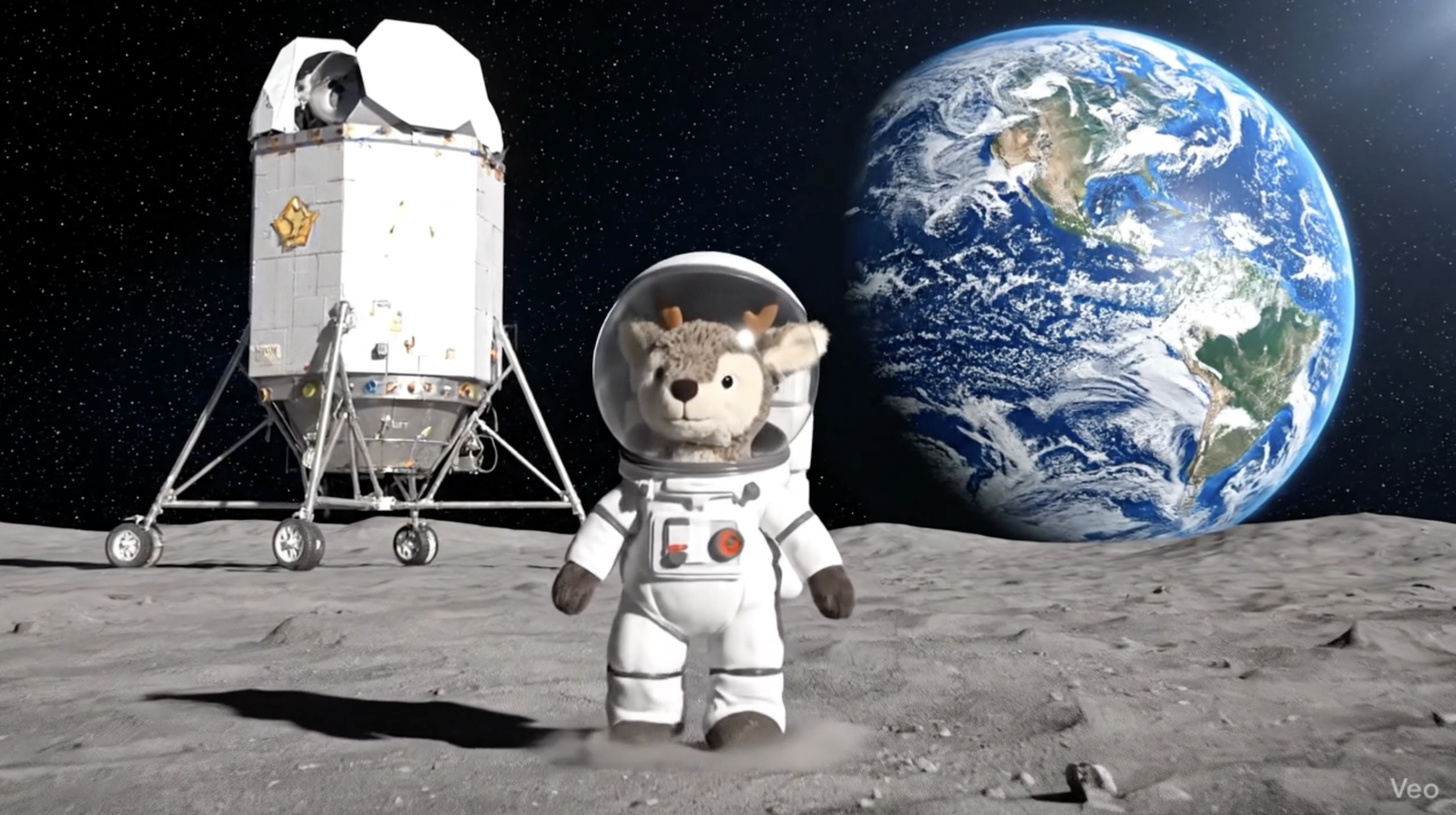

The second phase of the experiment tested Gemini's generative capabilities, specifically its ability to build a visual narrative. Given a photograph of Buddy on an airplane, the prompt "make a photo of the deer on his next flight" produced a result remarkably close to the advertisement's depiction. Minor inaccuracies in the toy's lower extremities were attributed to those details being hidden in the source image; that the AI filled them in plausibly at all speaks to its ability to extrapolate. Follow-up prompts such as "Now make a photo of the same deer in front of the Grand Canyon" further demonstrated Gemini's skill at scene generation. It carried over elements such as airplane seatbelts and headphones even when they were not requested, apparently in an attempt to maintain continuity with the earlier image. A later iteration that placed a camera in the deer's hands produced a more convincing, actively engaged portrayal.

However, the exercise revealed significant challenges in replicating the seamlessness of the advertisement's final sequence: short video clips of the toy in various adventurous scenarios, culminating in a direct address to the child. The advertisement implies rapid, on-demand video generation; in reality, Gemini takes several minutes to produce each clip. Usage limits add further friction: with a cap of three videos per day on Gemini Pro accounts, reproducing the range of scenes shown in the commercial would take several days.

Crucially, Gemini's guardrails prevented it from generating videos of a child holding the stuffed toy, likely a safeguard against deepfakes and the exploitation of minors. As a result, the experiment had to start from an image of Buddy alone: a photograph of the toy air-drying after a wash. This yielded a somewhat surreal initial video in which Buddy appeared upside down before morphing into the requested astronaut role. A second attempt with a right-side-up image produced a more coherent astronaut sequence but introduced unintended artifacts, such as exaggerated antlers, showing that the AI's interpretation can drift from a faithful reproduction of the original toy.

The most profound ethical and emotional quandary emerged when the AI was asked to create a direct message from the toy to the child. In the advertisement, Mr. Fuzzy speaks directly to "Emma." When the author attempted the same thing, with Buddy addressing the author's son by name, the result provoked a strong emotional reaction: a synthesized voice delivering a personalized message, even in the guise of a beloved toy, crossed a line for the author. The moment illuminated a critical distinction. AI can skillfully mimic creative output and even simulate emotional resonance, but its role in interacting directly with a child, particularly in a sensitive situation like the loss of a treasured object, warrants careful consideration.

The question of how parents should respond to childhood distress has no single answer. Options include the traditional "bait-and-switch" with an identical toy, an open conversation about grief and loss, and the strategic use of AI to soften the immediate upset. Each approach has its own benefits and drawbacks. However, the prospect of an AI-generated character addressing a child directly, offering manufactured comfort or explanations, raises concerns about authenticity and about blurring the line between reality and digital fabrication. Where the author drew the line, at AI speaking directly to a child, underscores how personal the decision to bring such technology into family life is.

In conclusion, while Google’s Gemini advertising campaign effectively showcases the aspirational capabilities of AI in creative content generation and problem-solving, a hands-on experiment reveals a more nuanced reality. The AI can, with significant effort and careful prompting, approximate many of the advertised functionalities. However, the process is far from seamless, demanding considerable user input, iterative refinement, and an understanding of the AI’s current limitations. The success of such endeavors is heavily contingent on the quality and nature of the source material, and the presence of ethical safeguards can sometimes preclude the exact replication of advertised scenarios.

The enduring power of beloved childhood companions like Buddy lies not just in their physical presence but in the imaginative spaces they inhabit within a child's mind. This is a domain that artists like Bill Watterson, through his principled stance against the commercialization of "Calvin and Hobbes," sought to preserve, recognizing the unique magic of letting such characters live within the realm of individual imagination. The deep, almost primal bond a child forms with a stuffed animal is delicate and transient. As children mature, their reliance on these tactile comforts naturally wanes. For parents, watching a child's pure, unadulterated affection for a toy like Buddy can evoke a complex blend of heartwarming joy and poignant awareness of its finite nature. The impulse to shield children from the pain of loss, while understandable, may also reflect a parental desire to prolong these fleeting moments, delaying the parent's own inevitable encounter with a child's growing independence and evolving emotional landscape.

Ultimately, while AI offers remarkable tools for creative expression and communication, its application in direct emotional support for children, especially in sensitive contexts, requires careful ethical deliberation, ensuring that technology serves to augment, rather than overwrite, the authentic bonds and imaginative worlds that shape childhood.