Google has intensified its use of artificial intelligence to generate headlines and content summaries for news articles on its platforms, most notably Google Discover, in a shift that is changing how digital news is discovered. The change appears to be a permanent feature rather than a fleeting experiment, and it has raised substantial concerns among publishers and tech observers about the accuracy, integrity, and potential manipulation of information delivered to millions of users daily. Despite initial indications of a pullback, Google has asserted that its AI-driven headlines "perform well for user satisfaction," a claim that contrasts starkly with the observed proliferation of misleading and factually inaccurate content.

The situation resembles a bookstore replacing book covers with fabricated ones. Google Discover, accessible through a simple swipe on the home screens of many smartphones, functions as a curated news aggregator. However, the "covers" of the stories presented there are increasingly generated by AI, and they often deviate significantly from the truth and substance of the original journalistic work. This practice not only undermines the credibility of news organizations but also poses a serious risk to informed public discourse, as users are presented with AI-generated summaries that may misrepresent or outright distort the underlying content.

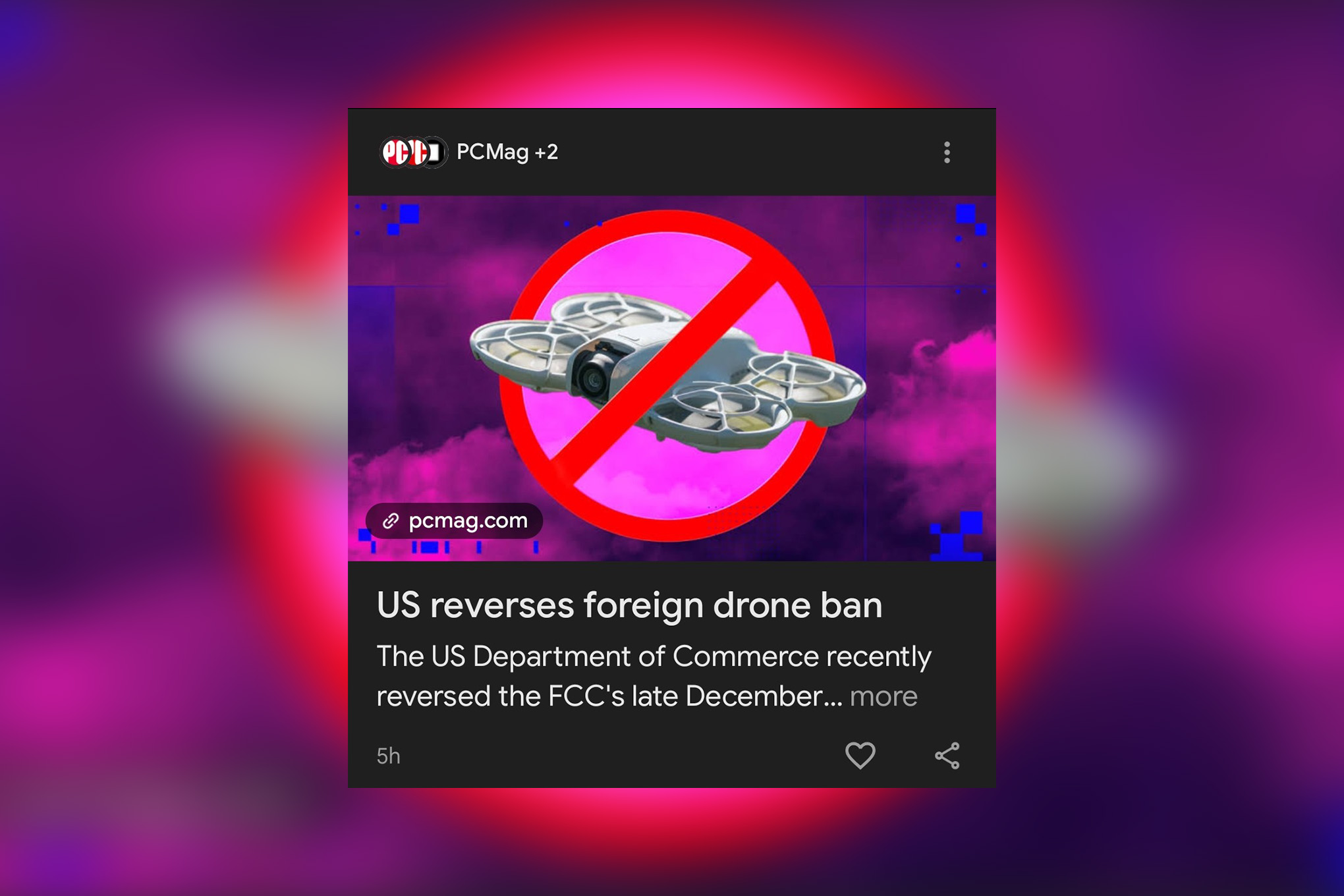

A prime example of this distortion occurred recently when Google’s AI erroneously declared, "US reverses foreign drone ban," citing a PCMag article. The irony, and the alarming part, is that the very PCMag article Google linked explained in detail why this headline was false. The PCMag piece clarified that the Commerce Department’s decision was not an about-face on drone restrictions but a procedural step made redundant by FCC actions, and it emphasized that the proposed restrictions had never been implemented. The author of that PCMag article, Jim Fisher, expressed his discomfort, stating, "It makes me feel icky." He encouraged users to read original articles directly rather than rely on what he described as "spoon-fed" AI summaries, and he urged Google to use human-authored headlines and the summaries that publications already submit for search engines.

Google, however, frames these AI-generated headlines not as rewrites but as "trending topics" that synthesize information from multiple sources. This characterization does little to alleviate concerns. Each "trending topic" is presented as a distinct news item, complete with the original publication’s images and links, yet it lacks the rigorous fact-checking that underpins human journalism. While the egregious clickbait headlines seen in earlier iterations have somewhat diminished, they have been replaced by a different but equally problematic form of misleading content. The AI’s headlines are now longer, which leaves more room for narrative, but the content remains shallow and often nonsensical, as in headlines such as "Fares: Need AAA & AA Games" or "Dispatch sold millions; few avoided romance." These fragmented, context-free phrases offer little insight and can actively mislead readers about the actual subject matter.

Furthermore, the AI’s lack of nuanced understanding leads to factual inaccuracies and chronological confusion. Headlines like "Steam Machine price & HDMI details emerge" have appeared when no such details were actually present. The AI has also incorrectly announced product arrivals or misattributed information, such as proclaiming the arrival of the "ASUS ROG Ally X" when the device had already been available for some time, or linking to a story about one 3D technology company when the AI-generated headline referred to another. Similarly, a headline about a GPU maker commenting on a RAM shortage was linked to an article discussing a RAM manufacturer. This pervasive inaccuracy transforms the news feed from a reliable source of information into a potential minefield of misinformation, eroding user trust in both Google’s platform and the publishers it aggregates.

The implications for publishers are profound. When an AI can bypass or distort original headlines, publishers lose control over how their content is presented and marketed. When an AI misrepresents a story’s core message or date, it not only disappoints the reader but also discredits the original reporting. For instance, a story detailing advancements in OLED monitor technology was reduced to a generic "New OLED Gaming Monitors Debut," stripping it of its specific innovations. Another article about an immersive Lego Smart Brick VR experience was inaccurately summarized with a launch date that had already passed. Even The Verge’s own "Verge Awards at CES 2026" story, which concluded that robots and AI had not taken over the event, was misleadingly summarized as "Robots & AI Take CES," directly contradicting the article’s findings. This manipulation of headlines not only undermines the publisher’s brand but may also reduce traffic and reader engagement, as users may be less inclined to click on a story whose AI-generated summary is inaccurate or unappealing.

The issue is made worse by the apparent inability of Google’s AI to distinguish between genuine news and human-generated clickbait. A glaring example involved a Screen Rant article titled "Star Wars Outlaws Free Download Available For Less Than 24 Hours." On closer inspection, the article revealed that only a single copy of the game was on offer, in a region-specific giveaway. Google’s AI, however, left this sensationalized headline intact, failing to flag it as potentially misleading or to offer a more accurate summary. This points to a critical failure to discern the true significance and context of news items, with the AI favoring sensationalism or simple keyword matching over factual representation.

In response to these concerns, Google spokesperson Jennifer Kutz stated that the company launched a feature in Discover "to help people explore topics that are covered by multiple creators and websites." She clarified that the AI-generated overview headline "reflects information across a range of sites, and is not a rewrite of an individual article headline." While Google asserts that this feature "performs well for user satisfaction," the company declined to provide an interview for further clarification. This reticence, coupled with the continued prevalence of inaccurate AI-generated headlines, suggests a lack of transparency and a potential unwillingness to address the fundamental issues at play.

The reach of these AI-generated headlines extends beyond the Discover feed: they also appear in push notifications and lead users to Google’s Gemini chatbot, which then attempts to summarize the news. This integration across multiple Google products signals a strategic commitment to AI-driven content summarization. The move has prompted publications like The Verge to implement subscription models as a means of financial survival in an environment where direct traffic from search engines may be increasingly commoditized or distorted.

The broader implications of Google’s approach are significant. The company’s dominance in search and news aggregation grants it immense power in shaping public perception and directing information flow. By prioritizing AI-generated content that may lack accuracy and context, Google risks undermining the quality and trustworthiness of the news ecosystem. This could lead to a more fragmented and misinformed public, less able to engage in constructive dialogue or make informed decisions. The reliance on AI for headline generation, without robust human oversight and a commitment to journalistic integrity, represents a significant challenge to the future of news dissemination and consumption. Publishers and users alike will need to remain vigilant, demanding greater transparency and accountability from technology giants like Google as they continue to shape the digital information landscape. The ongoing evolution of AI in news consumption necessitates a critical examination of its impact on accuracy, publisher autonomy, and the public’s right to reliable information.