The European Union has opened a formal probe into Elon Musk’s artificial intelligence venture, xAI, citing concerns about the potential proliferation of deepfakes and the spread of misleading content generated by its AI models. The action underscores the growing scrutiny faced by advanced AI developers as the bloc seeks to protect its digital ecosystem and democratic processes from emerging technological threats.

The European Commission’s Directorate-General for Communications Networks, Content and Technology (DG CONNECT) has formally opened an investigation into xAI, the artificial intelligence company founded by Elon Musk. The probe centers on the risks posed by xAI’s generative AI models, particularly the creation and distribution of deepfakes and other synthetic media that could be used to spread disinformation or manipulate public opinion. The move marks a significant escalation in regulatory oversight of AI developers and signals the EU’s intent to address the challenges of advanced AI technologies before harms materialize.

At the heart of the Commission’s concern is the potential for xAI’s technology, especially its conversational AI tool Grok, to generate and disseminate content that is deceptive, harmful, or unlawful. The investigation will examine whether xAI has implemented sufficient safeguards and risk mitigation strategies to prevent the misuse of its AI systems. This includes assessing the effectiveness of measures designed to detect and label AI-generated content, particularly deepfakes, and to prevent the spread of illegal or harmful material.

The EU’s Digital Services Act (DSA) provides the regulatory framework for this investigation. The DSA, which has applied in full to all covered services since February 2024, imposes stringent obligations on online platforms and intermediary services to combat illegal content, protect user rights, and ensure transparency in algorithmic systems. As an AI provider developing advanced generative models, xAI falls under the purview of these regulations, particularly with respect to its responsibilities for managing the content produced by its AI. The Commission will scrutinize xAI’s compliance with the DSA’s provisions on risk assessment and mitigation, transparency, and due diligence.

Background and Context: The Rise of Generative AI and Emerging Risks

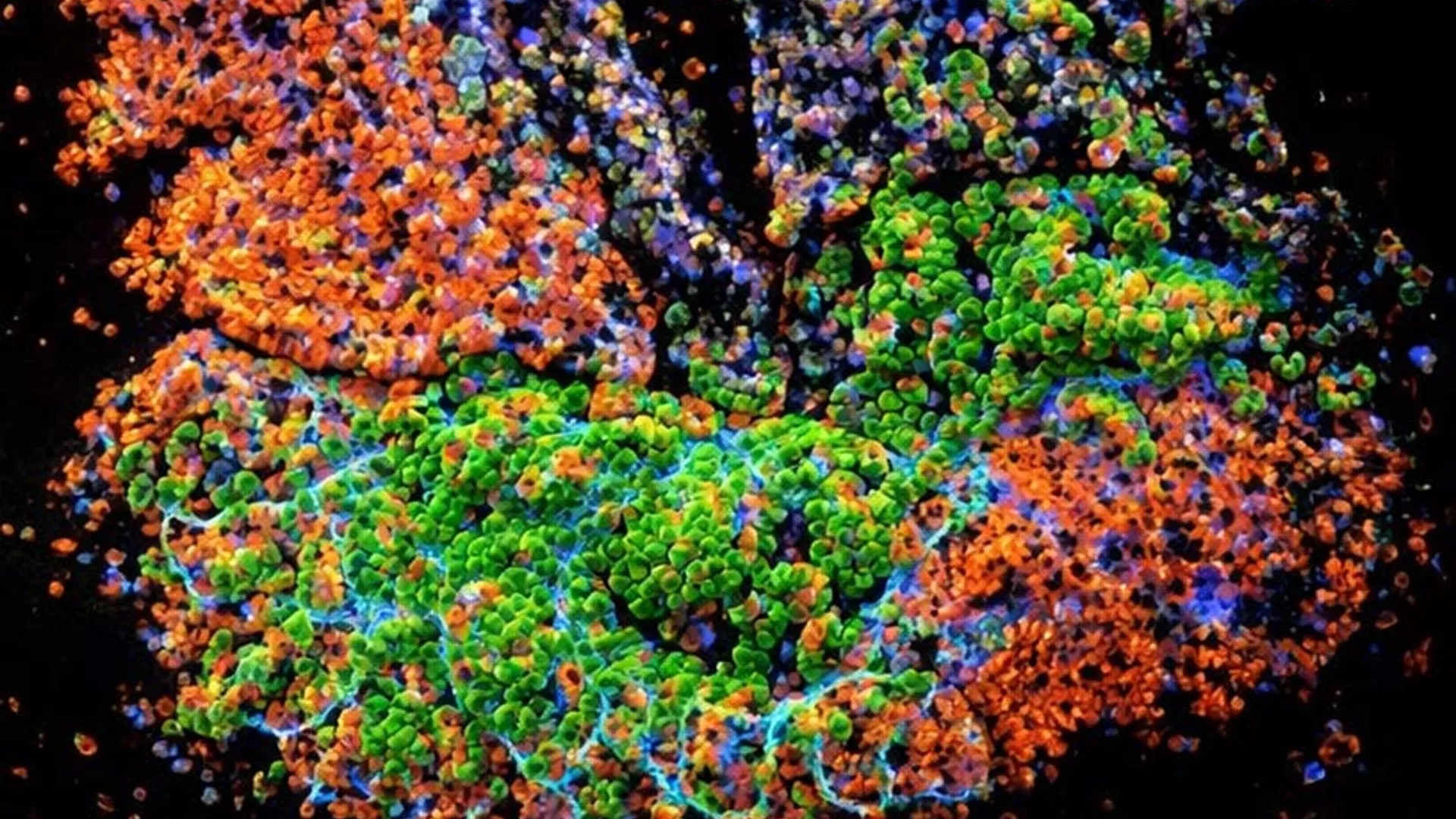

The rapid advancement of generative artificial intelligence has brought forth unprecedented capabilities, enabling machines to create sophisticated text, images, audio, and video that are increasingly indistinguishable from human-generated content. While these technologies offer immense potential for innovation and creativity, they also present profound societal risks. Deepfakes, in particular, have emerged as a significant concern, with the ability to create hyper-realistic fabricated videos or audio recordings that can be used to impersonate individuals, spread false narratives, or incite social unrest.

The proliferation of such synthetic media poses a direct threat to democratic processes, the integrity of public discourse, and individual reputation. Malicious actors could leverage deepfakes to influence elections, destabilize markets, or engage in targeted harassment and defamation. The speed and scale at which AI can generate and distribute such content amplify these risks, making it challenging for traditional content moderation and verification mechanisms to keep pace.

In this evolving landscape, regulatory bodies worldwide are grappling with how to balance fostering AI innovation against mitigating its potential harms. The European Union has positioned itself as a leader in this effort, with the DSA and the AI Act together aiming to establish a comprehensive legal framework for the development and deployment of AI. The investigation into xAI is a concrete example of this approach, signaling that the EU is prepared to hold AI developers accountable for the societal impact of their technologies.

Scope of the Investigation: Deepfakes, Disinformation, and Algorithmic Transparency

The European Commission’s investigation into xAI will likely encompass several key areas:

- Deepfake Detection and Prevention: A primary focus will be on xAI’s capabilities and strategies for identifying and preventing the creation and dissemination of deepfakes. This includes assessing whether the company has implemented robust technical measures to detect synthetic media and whether it has policies in place to prohibit the generation of deceptive or harmful deepfakes. The Commission will also examine the effectiveness of any watermarking or labeling mechanisms designed to distinguish AI-generated content.

- Disinformation and Harmful Content Mitigation: Beyond deepfakes, the investigation will scrutinize xAI’s approach to combating the broader spread of disinformation and other forms of harmful content generated by its AI models. This involves evaluating the company’s content moderation policies, its mechanisms for identifying and flagging problematic content, and its responsiveness to user reports of misuse.

- Algorithmic Transparency and Explainability: The Commission may also delve into the transparency of xAI’s AI models and algorithms. Understanding how these models generate content and what data they are trained on is crucial for assessing potential biases and risks. The investigation could seek to understand the extent to which xAI provides explanations for its AI’s outputs and whether it is transparent about the limitations and potential failure modes of its systems.

- User Safety and Rights Protection: A fundamental aspect of the DSA is the protection of users’ fundamental rights. The investigation will likely assess whether xAI has adequately considered and addressed the potential impact of its AI on user safety, privacy, and freedom of expression, ensuring that its services do not lead to discriminatory outcomes or infringe upon individual rights.

- Compliance with Risk Mitigation Obligations: Under the DSA, providers of online services are required to conduct regular risk assessments and implement appropriate mitigation measures. The Commission will verify whether xAI has fulfilled these obligations concerning the specific risks associated with its generative AI technologies.

Implications for xAI and the Broader AI Industry

The formal probe by the European Commission carries significant implications for xAI. The investigation could lead to substantial regulatory interventions, including fines for non-compliance (which under the DSA can reach 6% of a provider’s global annual turnover), mandatory changes to product development and deployment practices, or even restrictions on the availability of certain AI features within the EU.

For xAI, this investigation represents a critical juncture. The company, still in its nascent stages, will need to demonstrate a commitment to responsible AI development and a willingness to cooperate with regulatory authorities. Failure to do so could not only result in legal repercussions but also damage its reputation and hinder its ability to operate effectively in a key global market. The company’s response to this inquiry will be closely watched as an indicator of its approach to regulatory compliance and ethical AI deployment.

Beyond xAI, this investigation serves as a potent signal to the entire AI industry. It underscores that the era of relatively unfettered development of powerful AI technologies is drawing to a close, at least within jurisdictions that are proactively implementing robust regulatory frameworks. Other major AI developers, including those working on large language models and generative media tools, will likely face increased scrutiny from regulators worldwide. This probe emphasizes the growing expectation that AI companies must not only innovate but also take significant responsibility for the societal consequences of their creations.

Expert Analysis and Future Outlook

The EU’s action reflects a maturing understanding of the complex interplay between technological advancement and societal well-being. Experts in AI ethics and regulation have long cautioned about the potential for generative AI to be weaponized for malicious purposes. This investigation validates those concerns and highlights the need for a proactive, rather than reactive, approach to AI governance.

"This probe is a clear indication that regulatory bodies are no longer content with observing the rapid evolution of AI from the sidelines," commented Dr. Anya Sharma, a leading AI ethics researcher. "The European Commission is taking a decisive step to ensure that companies developing powerful AI tools are held accountable for potential harms. The focus on deepfakes and disinformation is particularly pertinent, as these are among the most immediate and tangible threats posed by current AI capabilities."

The investigation is also likely to spur further development and refinement of regulatory tools and methodologies for assessing AI risks. As AI technologies continue to advance, regulators will need to adapt and innovate to effectively oversee this dynamic field. This may involve the creation of specialized AI auditing bodies, the development of standardized risk assessment frameworks, and enhanced international cooperation to address cross-border AI challenges.

Looking ahead, the outcome of this investigation could set precedents for how other jurisdictions regulate generative AI. It reinforces the EU’s ambition to be a global standard-setter in digital regulation, influencing how AI is developed and deployed both within its borders and beyond. Companies operating in the AI space can anticipate increased transparency requirements, stricter risk management obligations, and a greater emphasis on ethical considerations throughout the AI lifecycle. The investigation into xAI is more than a single regulatory action; it is part of the ongoing debate over how to use artificial intelligence responsibly.